What is Docker?

A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Virtual Machine World

Container World

Docker run

Hello World

$ docker run hello-world

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

Slightly more useful Hello World

# Remember the -it - it means "I want an interactive terminal" (-i keeps STDIN open, -t allocates a TTY)

$ docker run -it python:3.8-slim

Python 3.8.3 (default, Jun 9 2020, 17:49:41)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> print("hello world")

hello world

Terminology

Image

A blueprint for a Docker container

Container

A running instance of an image

Set up a database

docker run -p 5432:5432 postgres

Error: Database is uninitialized and superuser password is not specified.

You must specify POSTGRES_PASSWORD to a non-empty value for the

superuser. For example, "-e POSTGRES_PASSWORD=password" on "docker run".

You may also use "POSTGRES_HOST_AUTH_METHOD=trust" to allow all

connections without a password. This is *not* recommended.

See PostgreSQL documentation about "trust":

https://www.postgresql.org/docs/current/auth-trust.html

Passing environment variables

- Modern software is often configured with environment variables

- We can pass them into our docker container with the -e flag

docker run -p 5432:5432 -e POSTGRES_PASSWORD=postgres postgres

...

2020-08-12 13:27:45.275 UTC [1] LOG: starting PostgreSQL 12.3 (Debian 12.3-1.pgdg100+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 8.3.0-6) 8.3.0, 64-bit

2020-08-12 13:27:45.276 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432

2020-08-12 13:27:45.276 UTC [1] LOG: listening on IPv6 address "::", port 5432

2020-08-12 13:27:45.277 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

2020-08-12 13:27:45.288 UTC [55] LOG: database system was shut down at 2020-08-12 13:27:45 UTC

2020-08-12 13:27:45.292 UTC [1] LOG: database system is ready to accept connections
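Inside the container, the application reads this configuration with a plain environment lookup. The sketch below is a minimal Python illustration that loosely mirrors the check behind the Postgres error above. The real postgres image does this check in a shell entrypoint script, and get_superuser_password is a hypothetical helper, not part of any library.

```python
import os
from typing import Mapping, Optional


def get_superuser_password(env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Hypothetical helper loosely mirroring the postgres image's startup check."""
    password = env.get("POSTGRES_PASSWORD", "")
    if password:
        return password
    # Allowed without a password, but *not* recommended
    if env.get("POSTGRES_HOST_AUTH_METHOD") == "trust":
        return None
    raise RuntimeError(
        "Database is uninitialized and superuser password is not specified. "
        "You must specify POSTGRES_PASSWORD to a non-empty value."
    )


# Passing a dict instead of os.environ makes the behaviour easy to try out
print(get_superuser_password({"POSTGRES_PASSWORD": "postgres"}))  # → postgres
```

Taking the environment as a parameter (defaulting to os.environ) is a design choice that keeps the function testable without touching the real process environment.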

Port forwarding

- The -p flag indicates which port to expose to your system

- The application in the container listens on a port inside the Docker network

- That port needs to be exposed to your machine so network traffic is routed into the container

- We can map whatever port we want from our machine into the container: -p HOST_PORT:CONTAINER_PORT
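The following Python sketch splits a -p value into its parts. It assumes only the common form HOST_PORT:CONTAINER_PORT, optionally with a host IP in front and a /tcp or /udp suffix. PortMapping and parse_port_mapping are hypothetical names. Docker's own parser accepts more forms, such as port ranges, IPv6 addresses and a container port on its own.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]   # None means "all interfaces"
    host_port: int           # left side: the port on your machine
    container_port: int      # right side: the port inside the container
    protocol: str


def parse_port_mapping(spec: str) -> PortMapping:
    """Split a simplified -p value: [HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL]."""
    mapping, _, protocol = spec.partition("/")
    parts = mapping.split(":")
    if len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    # TCP is assumed when no protocol suffix is given
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


print(parse_port_mapping("8080:80"))
# → PortMapping(host_ip=None, host_port=8080, container_port=80, protocol='tcp')
```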

Let’s have a webserver

# Create a simple webpage

echo "<h1>Hello world</h1>" > index.html

# Run an nginx container

docker run -d -v "$(pwd)/index.html:/usr/share/nginx/html/index.html:ro" -p 8080:80 nginx

Volume mounting

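We have a file on our computer, index.html, that we want inside our container

- Volume mounting means exposing our local files/directories to the container (mounting a host path like this is strictly called a bind mount)

- The left side of the : is the path on my machine

- The right side is the location inside the container

- The trailing :ro makes the mount read-only inside the container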
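The left/right split can be sketched the same way in Python. This assumes a simplified HOST_PATH:CONTAINER_PATH[:ro|rw] form. BindMount, parse_volume and the example host path are hypothetical. Real -v values can carry other options, and Windows paths with a drive letter (C:\...) contain an extra colon that this sketch does not handle.

```python
from typing import NamedTuple


class BindMount(NamedTuple):
    host_path: str        # left side: a file or directory on your machine
    container_path: str   # right side: where it appears inside the container
    read_only: bool


def parse_volume(spec: str) -> BindMount:
    """Split a simplified -v value: HOST_PATH:CONTAINER_PATH[:ro|rw]."""
    parts = spec.split(":")
    if len(parts) == 2:
        host, container = parts
        mode = "rw"  # mounts are read-write unless told otherwise
    elif len(parts) == 3:
        host, container, mode = parts
    else:
        raise ValueError(f"unsupported volume spec: {spec!r}")
    if mode not in ("ro", "rw"):
        raise ValueError(f"unsupported mode: {mode!r}")
    return BindMount(host, container, mode == "ro")


print(parse_volume("/home/me/site/index.html:/usr/share/nginx/html/index.html:ro"))
```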

Docker build

Docker Hub

All the images we’ve been using come from Docker Hub, a central, public registry for Docker images

- These images have all been created by other people

- Some are official images

- You can upload your own images as well

Defining an image

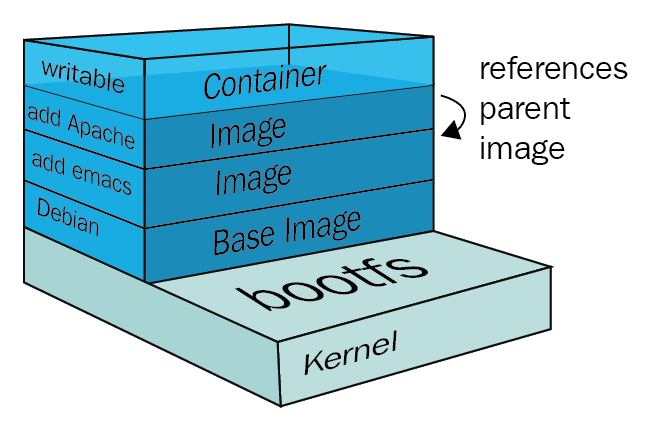

A Docker image is defined by a Dockerfile: a step-by-step recipe for how the image is built and how its containers should run

A Dockerfile can be simple - let’s remake our nginx server as a Dockerfile

FROM nginx

COPY index.html /usr/share/nginx/html/index.html

Now we can build the Dockerfile and create an image

$ docker build -t test_nginx .

Sending build context to Docker daemon 3.072kB

Step 1/2 : FROM nginx

---> 2622e6cca7eb

Step 2/2 : COPY index.html /usr/share/nginx/html/index.html

---> 01df86f8a47f

Successfully built 01df86f8a47f

Successfully tagged test_nginx:latest

Tagging images

- Just like git, Docker uses SHA-256 hashes to keep track of objects

- We can name our images by tagging them

- We can also specify versions, like python:3.8-slim in the Python hello world

We can add a version tag to our previous build

$ docker tag test_nginx:latest test_nginx:0.1.0

$ docker images


REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
test_nginx   0.1.0    01df86f8a47f   7 minutes ago   132MB
test_nginx   latest   01df86f8a47f   7 minutes ago   132MB

Notice they have the same IMAGE ID: a tag is just another name pointing at the same image
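Here is a toy model of why both tags share an ID. Content is identified by its SHA-256 hash, and a tag is only a name that points at that hash. The config dict, image_id and short_id are hypothetical. Docker hashes its own image metadata rather than a dict like this, so only the principle carries over: identical content gives an identical ID, and the IMAGE ID column shows the first 12 hex characters.

```python
import hashlib
import json


def image_id(config: dict) -> str:
    """Content-address a (hypothetical) image config: same content -> same ID."""
    # Serialise deterministically so identical configs always hash identically
    payload = json.dumps(config, sort_keys=True, separators=(",", ":")).encode()
    return "sha256:" + hashlib.sha256(payload).hexdigest()


def short_id(full_id: str) -> str:
    """Truncate to the 12 hex characters shown in the IMAGE ID column."""
    return full_id.split(":", 1)[1][:12]


config = {"from": "nginx", "steps": ["COPY index.html /usr/share/nginx/html/index.html"]}

# Tags are just names in a lookup table; tagging copies the pointer, not the image
tags = {"test_nginx:latest": image_id(config)}
tags["test_nginx:0.1.0"] = tags["test_nginx:latest"]  # roughly what docker tag does

for name, digest in tags.items():
    print(name, short_id(digest))
```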

Anatomy of a Dockerfile

A Dockerfile has a handful of commands to know

FROM

RUN

COPY

LABEL

CMD

ENTRYPOINT

WORKDIR

FROM

What image are we basing our image off of?

Docker images are extensible, so it’s simple to build off of other people’s work

FROM python:3.8.3-slim

RUN

Run a command inside the container at build time - install some dependency, set up some data, etc.

RUN pip install psycopg2

COPY

Copy a file from your machine into the container when building

- left side == your computer

- right side == docker container

COPY requirements.txt requirements.txt

LABEL

Labels add metadata to an image that can be used by tooling or looked up with docker inspect

LABEL maintainer="maintainer@mycompany.org"

CMD

The command that is executed when we do docker run without any arguments

CMD ["flask", "run", "-p", "8080"]

Notice the syntax: this JSON-array (exec) form runs the command directly instead of through a shell, so the arguments are passed exactly as written

We can override the CMD by passing the command we want to run

$ docker run -it python:3.8-slim /bin/bash

root@2e321b156c7b:/#

ENTRYPOINT

Sometimes we want to run the container with arguments.

If ENTRYPOINT is specified, any arguments passed to docker run after the image name are appended to the ENTRYPOINT (and replace CMD)

# docker run arguments will be appended
ENTRYPOINT ["python", "-i"]
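The Python sketch below shows how the exec-form ENTRYPOINT, CMD and the docker run arguments combine into the command that actually runs. effective_command is a hypothetical helper. It covers only the exec (JSON-array) forms, not shell-form instructions or the --entrypoint override.

```python
from typing import List, Optional


def effective_command(
    entrypoint: Optional[List[str]],
    cmd: Optional[List[str]],
    run_args: List[str],
) -> List[str]:
    """Combine exec-form ENTRYPOINT, CMD and docker run arguments (simplified)."""
    # Arguments given after the image name replace CMD entirely
    args = run_args if run_args else (cmd or [])
    # ENTRYPOINT, when set, is always the prefix; otherwise args are the whole command
    return (entrypoint or []) + args


print(effective_command(["python", "-i"], None, ["app.py"]))  # → ['python', '-i', 'app.py']
print(effective_command(None, ["flask", "run", "-p", "8080"], ["/bin/bash"]))  # → ['/bin/bash']
```

With this split, ENTRYPOINT fixes the program and CMD supplies overridable default arguments.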

WORKDIR

Sets the working directory inside the container - the docker version of cd

Useful if you want everything to happen in a subfolder

WORKDIR /app
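Successive WORKDIR instructions build on each other. An absolute path replaces the current directory, and a relative path is resolved against the previous WORKDIR. The Python sketch below models that with posixpath. resolve_workdir is a hypothetical helper, and it assumes the base image leaves the working directory at / (some base images set their own).

```python
import posixpath
from typing import List


def resolve_workdir(workdirs: List[str], start: str = "/") -> str:
    """Apply successive WORKDIR values; relative paths build on the previous one."""
    current = start
    for path in workdirs:
        # posixpath.join discards `current` when `path` is absolute
        current = posixpath.normpath(posixpath.join(current, path))
    return current


print(resolve_workdir(["/a", "b", "c"]))  # → /a/b/c
```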

Exercise

What is it?

- Docker compose coordinates multiple docker containers

In Docker

Imagine we have a python API that needs a Redis cache and a Postgres database. With only docker we would do

- docker build webapp

- docker run webapp

- docker run redis

- docker run postgres

- Add them all to the same docker network, so they can talk to each other

- Make sure each one has the correct environment variables to connect to each other

In docker-compose

docker-compose up

Seems a bit shorter?

The yaml file

Docker-compose specifies all the configuration necessary in the docker-compose.yaml file

The most important sections

- build

- environment

- ports

- volumes

- image

Building a docker-compose file

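We always need to specify a version number so that docker-compose knows whether the file is compatible

The version numbers can be found here, and the latest is 3.8 at the time of writing (newer Compose releases that follow the Compose Specification treat the top-level version field as obsolete and ignore it)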

version: "3.8"

Define services

docker deals with one service; docker-compose deals with multiple, so we need to define each service in its own block

version: "3.8"
services:
  service1:
    ...
  service2:
    ...

build

Let’s add our postgres service from before

version: "3.8"
services:
  db:
    build:
      context: ./db
      dockerfile: Dockerfile

And run it

docker-compose up

environment

We need to add the POSTGRES_PASSWORD environment variable to the service's environment, otherwise the container exits with the error we saw earlier

version: "3.8"
services:
  db:
    build:
      context: ./db
      dockerfile: Dockerfile
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=postgres

Exercise

- Create a new folder nginx

- Create an index.html file in that folder

- Add a <h1>Hello world</h1> to the index.html

- Write a Dockerfile to run nginx and show that file

- Add that service to the yaml file

Solution

version: "3.8"
services:
  db:
    build:
      context: ./db
      dockerfile: Dockerfile
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=postgres
  nginx:
    build:
      context: ./nginx
      dockerfile: Dockerfile

ports

In the exercise, we added the application - but we can’t actually access our website

We need a port mapping

...
  nginx:
    build:
      context: ./nginx
      dockerfile: Dockerfile
    ports:
      - "8080:80"

Now we can go to localhost:8080 and see our website

volumes

We can also mount files in docker-compose - we can add a “hot reload” to our website.

...
  nginx:
    build:
      context: ./nginx
      dockerfile: Dockerfile
    ports:
      - "8080:80"
    volumes:
      - "./index.html:/usr/share/nginx/html/index.html"

We can now add an index.html file to the same directory as the docker-compose file and look at the website

Try changing the contents of index.html

image

We can also specify an image to load

Let’s add a redis instance

...
  redis:
    image: redis

Image replaces build - everything else is the same

networking

Docker-compose automatically puts all the services in the same network

When you are inside a container, all you have to do is refer to the service name instead of the IP address

Connecting to postgres

- User: postgres

- Password: postgres

import sqlalchemy as sa

engine = sa.create_engine("postgresql://postgres:postgres@db:5432")  # "db" is the service name in docker-compose.yaml
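If the credentials come from environment variables, it helps to build the URL programmatically, so that a password containing characters like @ or : does not break it. The Python sketch below assumes the db service from the compose file and the default postgres database. make_db_url is a hypothetical helper; quote and urlsplit are from the standard library.

```python
from urllib.parse import quote, urlsplit


def make_db_url(user: str, password: str, host: str, port: int = 5432, dbname: str = "postgres") -> str:
    """Build a PostgreSQL URL; inside Compose, `host` is the service name."""
    # Percent-encode credentials so characters like @ or : don't break the URL
    return f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}/{dbname}"


url = make_db_url("postgres", "postgres", "db")
parts = urlsplit(url)
print(parts.hostname, parts.port, parts.username)  # → db 5432 postgres
```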

depends_on

When we are creating multiple services, we can set one to depend on another

Our python app is dependent on the DB being up, so we can add depends_on

...
  app:
    depends_on:
      - db

This will wait until the container has started - not until the application inside the container is ready
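Because depends_on does not wait for readiness, the application can poll for it itself. The Python sketch below retries a TCP connection until the port accepts it or a timeout runs out. wait_for_port and its defaults are hypothetical. An open port is a weaker signal than a successful query, so a production app might retry the real database connection instead.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True  # something is listening; close the probe connection
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Inside the compose network this would be, e.g.: wait_for_port("db", 5432)
```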

Exercise

Write a python application to insert the CSV you downloaded previously into the database

- Make a new folder app

- Take a connection string from an environment variable

- Read the csv

- Insert the data into the database (hint: use psycopg2)

- You might need to install dependencies; you can always use apt-get install

Exercise

- Write a Dockerfile for the application

- Add it to the docker-compose

Solution - Python app

import logging
import os
import time

import psycopg2

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(message)s")
logger = logging.getLogger("data")

DB_URL = os.environ["DB_URL"]

# Crude wait: depends_on only waits for the db container to start, not for Postgres to be ready
time.sleep(2)

logger.info("Opening connection to database")
conn = psycopg2.connect(DB_URL)

with open("placement_data_full_class.csv") as f:
    logger.info("Opening data file")
    next(f)  # skip the CSV header row
    with conn.cursor() as curs:
        logger.info("Copying data")
        # Assumes the placement_data table already exists; copy_from splits on
        # every comma, so fields must not contain quoted commas
        curs.copy_from(f, table="placement_data", sep=",", null="")
    conn.commit()

conn.close()
logger.info("Done copying data")

Solution - docker-compose

  app:
    build:
      context: ./app
      dockerfile: Dockerfile
    environment:
      - DB_URL=postgresql://postgres:postgres@db:5432
    depends_on:
      - db
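depends_on turns the services into a dependency graph, and a valid start order is a topological sort of that graph. The Python sketch below shows this. The services dict is a hypothetical Python mirror of the compose file, start_order is a hypothetical helper, and graphlib needs Python 3.9+. Compose itself may start independent services in parallel, so treat this as a model of the ordering constraint only.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical Python mirror of the docker-compose.yaml services
services = {
    "db": {"build": {"context": "./db"}},
    "nginx": {"build": {"context": "./nginx"}, "ports": ["8080:80"]},
    "redis": {"image": "redis"},
    "app": {"build": {"context": "./app"}, "depends_on": ["db"]},
}


def start_order(services: dict) -> list:
    """Order services so every dependency starts before the services that need it."""
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    for name, deps in graph.items():
        missing = deps - set(services)
        if missing:
            raise ValueError(f"{name} depends on unknown service(s): {sorted(missing)}")
    # TopologicalSorter takes node -> predecessors and raises CycleError on cycles
    return list(TopologicalSorter(graph).static_order())


print(start_order(services))
```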

Multi-stage builds

Multi-stage builds slim down our images

- Docker images can get big

docker image ls | grep python

python   3.8          79cc46abd78d   7 days ago     882MB
python   3.8.5-slim   07ea617545cd   2 weeks ago    113MB
python   3.8-slim     9d84edf35a0a   2 months ago   165MB
python   3.7.5        fbf9f709ca9f   9 months ago   917MB

The difference between python:3.8 and python:3.8-slim is 717 MB!

Size matters

Every MB of a Docker image has to be transmitted over the network - multiple times

- Slow startup

- Slow replication

- Storage costs

Why is it so big?

Docker has a concept of layers: each Dockerfile instruction that changes the filesystem (such as RUN, COPY and ADD) adds a new read-only layer on top

Slimming down

Since the layers are read-only, we can’t delete anything from a previous layer

Some tips and tricks

- Do many operations in a single layer

- Any deletion of files must happen in the same layer as they are created

- Choose smaller base images
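The Python toy model below shows why deletions must happen in the same layer as the files they remove. A later layer can only hide a file, but every layer is still shipped in full. Layer, image_size, visible_files and the MB sizes are all made up for illustration. Real layers are tar diffs that record deletions as whiteout entries, but the arithmetic works the same way.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """A toy read-only layer: files it adds (name -> size in MB) and files it hides."""
    added: dict = field(default_factory=dict)
    deleted: set = field(default_factory=set)


def image_size(layers) -> int:
    # Every layer is shipped in full, so "deleted" files still cost bytes
    return sum(sum(layer.added.values()) for layer in layers)


def visible_files(layers) -> dict:
    # What a container sees: later layers override or hide earlier ones
    files = {}
    for layer in layers:
        files.update(layer.added)
        for name in layer.deleted:
            files.pop(name, None)
    return files


# Three RUN steps (download, build, rm): the archive lives on in the first layer
separate = [Layer(added={"src.tar.gz": 300}), Layer(added={"app": 20}), Layer(deleted={"src.tar.gz"})]
# One RUN step doing download && build && rm: the archive never reaches a layer
combined = [Layer(added={"app": 20})]

print(image_size(separate), image_size(combined))  # → 320 20
```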

The build context

Did you notice this line?

$ docker build -t test_nginx .

Sending build context to Docker daemon 3.072kB

Docker sends the contents of your build context (the . at the end of the command) as a tar archive to the Docker daemon, the process that actually builds images and runs containers

If this gets big, it slows down your builds, and anything you copy in from it (for example with COPY . .) ends up in the image and makes it bigger

The solution: .dockerignore

- If you have large files you don’t need in your build, you can add them to .dockerignore, much like a .gitignore

- They’re not quite the same, so if it’s not doing what you want, look up the docs
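The core idea of .dockerignore can be sketched in Python. Each path in the context is checked against the patterns, the last matching pattern wins, and a leading ! re-includes a path. is_ignored, build_context and the example patterns are hypothetical. The matching rules are deliberately simplified: Python's fnmatch lets * cross / boundaries, which .dockerignore does not, and ** is not handled.

```python
from fnmatch import fnmatchcase


def is_ignored(path: str, patterns: list) -> bool:
    """Simplified .dockerignore check: last matching pattern wins, ! re-includes."""
    ignored = False
    for pattern in patterns:
        negate = pattern.startswith("!")
        if negate:
            pattern = pattern[1:]
        pattern = pattern.rstrip("/")
        # Match the path itself, or anything inside a matching directory
        if fnmatchcase(path, pattern) or fnmatchcase(path, pattern + "/*"):
            ignored = not negate
    return ignored


def build_context(paths: list, patterns: list) -> list:
    """Paths that would be sent to the daemon under these simplified rules."""
    return [p for p in paths if not is_ignored(p, patterns)]


patterns = [".git", "data/*.csv", "!data/small.csv"]
files = [".git/config", "app.py", "data/big.csv", "data/small.csv", "requirements.txt"]
print(build_context(files, patterns))  # → ['app.py', 'data/small.csv', 'requirements.txt']
```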

Multi-stage builds

Multi-stage builds means passing artifacts between stages in a Dockerfile

In essence, it lets us start from scratch with a new base image, bringing along only the files we choose to copy over

An example python build

FROM python:3.8 AS build

# Setup a virtualenv we can install into

RUN python -m venv /opt/venv

ENV PATH="/opt/venv/bin:$PATH"

# Install requirements into the virtualenv

COPY requirements.txt requirements.txt

RUN pip install -r requirements.txt

FROM python:3.8-slim AS run

# Copy the virtualenv from the build stage

COPY --from=build /opt/venv /opt/venv

ENV PATH="/opt/venv/bin:$PATH"

COPY . .

CMD ["python", "run.py"]

Exercise

- Check how big your docker-compose app is (docker images)

- Implement multi-stage in the app

- Build the new images

- Check how big your docker-compose app is now

Exercise

- Build something with the data we have downloaded!

- Only criterion - It should be in a Docker container

Ideas

- Build an ML API

- Build a report generator
  - Output a report of the data?

- Build a dashboard
  - streamlit
  - bokeh
  - dash
  - panel
  - voila

- Build an ETL job
  - Process the data and output processed data